What is SEO?

What is SEO?

A simple definition of search engine optimisation in 2015 is that it

is a technical and creative process to improve the visibility of a

website in search engines, with the aim of driving more potential

customers to it.

These free seo tips will help you create a successful seo friendly

website yourself, based on my 15 years experience making websites

rank in Google. If you need optimisation services – see my seo audit.

An Introduction

This is a beginner’s guide to effective white hat seo. I deliberately

steer clear of techniques that might be ‘grey hat’, as what is grey

today is often ‘black hat’ tomorrow, as far as Google is concerned.

No one page guide can explore this complex topic in full. What you’ll

read here is how I approach the basics – and these are the basics – as

far as I remember them. At least – these are answers to questions I had

when I was starting out in this field. And things have changed since I

started this company in 2006.

The ‘Rules’

Google insists webmasters adhere to their ‘rules’ and aims to reward sites with

high quality content and

remarkable ‘white hat’ web marketing techniques with

high rankings. Conversely it also

needs to penalise web sites that manage to rank in Google by breaking these rules.

These rules are not laws, only guidelines, for ranking in Google; laid down

by Google.

You should note that some methods of ranking in Google are, in fact,

actually illegal. Hacking, for instance, is illegal.

You can choose to follow and abide by these rules, bend them or

ignore them – all with different levels of success (and levels of

retribution, from Google’s web spam team).

White hats do it by the ‘rules’;

black hats ignore the ‘rules’.

What you read in this article is perfectly within the laws and within

the guidelines and will help you increase the traffic to your website

through organic, or natural search engine results pages (SERPS).

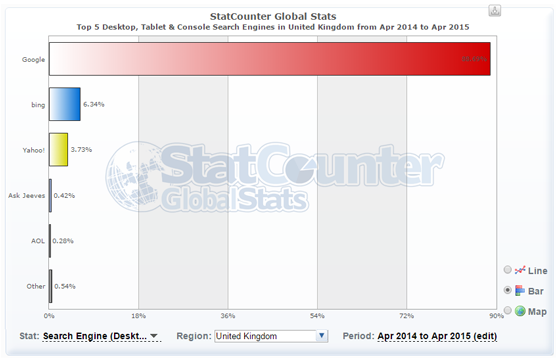

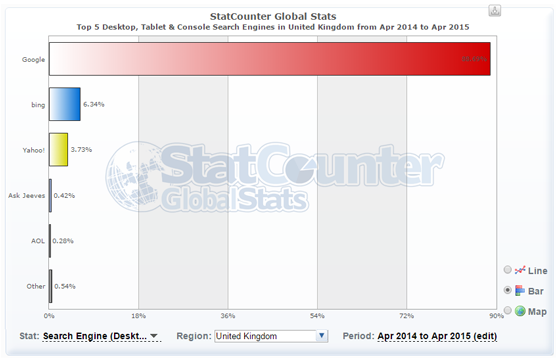

While there are a lot of definitions of SEO (spelled Search engine optimi

sation in the UK, Australia and New Zealand, or search engine optimi

zation

in the United States and Canada) organic SEO in 2015 is mostly about

getting free traffic from Google, the most popular search engine in the

world (and the only game in town in the UK):

The guide you are reading is for the more technical minded.

Opportunity

The art of web seo is understanding how people search for things, and

understanding what type of results Google wants to (or will) display to

it’s users. It’s about putting a lot of things together to look for

opportunity.

A good optimiser has an understanding of how search engines like Google generate their natural SERPS to satisfy users’

NAVIGATIONAL,

INFORMATIONAL and

TRANSACTIONAL keyword queries.

A good search engine marketer has a good understanding of the short

term and long term risks involved in optimising rankings in search

engines, and an understanding of the type of content and sites Google (

especially) WANTS to return in it’s natural SERPS.

The aim of any campaign is increased visibility in search engines.

There are rules to be followed or ignored, risks to be taken, gains to be made, and battles to be won or lost.

A Mountain View spokesman once called the search engine ‘

kingmakers‘, and that’s no lie.

Ranking high in Google is VERY VALUABLE – it’s effectively ‘free advertising’ on the best advertising space in the world.

Traffic from Google natural listings is STILL the most valuable

organic traffic to a website in the world, and it can make or break an

online business.

The state of play STILL is that you can generate your own highly

targeted leads, for FREE, just by improving your website and optimising

your content to be as relevant as possible for a customer looking for

your company, product or service.

As you can imagine, there’s a LOT of competition now for that free traffic – even from Google (!) in some niches.

The Process

The process can successfully practiced in a bedroom or a workplace,

but it has traditionally involved mastering many skills as they arose

including diverse marketing technologies including but not limited to:

- website design

- accessibility

- usability

- user experience

- website development

- php, html, css etc

- server management

- domain management

- copywriting

- spreadsheets

- back link analysis

- keyword research

- social media promotion

- software development

- analytics and data analysis

- information architecture

- looking at Google for hours on end

It takes a lot, in 2015, to rank on merit a page in Google in competitive niches, and

the stick Google is hitting every webmaster with (at the moment, and for the foreseeable future) is the ‘QUALITY USER EXPERIENCE‘ stick.

If you expect to rank in Google in 2015, you’d better have a quality

offering, not based entirely on manipulation, or old school tactics.

Is a visit to your site a good user experience? If not – beware

MANUAL QUALITY RATERS and

BEWARE the GOOGLE PANDA algorithm which is looking for signs of poor user experience and low quality content.

Google raising the ‘quality bar’ ensures a higher level of quality in

online marketing in general (above the very low quality we’ve seen over

the last years).

Success online involves HEAVY INVESTMENT in on page content, website

architecture, usability, conversion to optimisation balance, and

promotion.

If you don’t take that route, you’ll find yourself chased down by Google’s algorithms at some point in the coming year.

This ‘what is seo’ guide (and this entire website) is not about churn and burn type of Google seo (called webspam to Google).

What Is A Successful Strategy?

Get relevant. Get trusted. Get Popular.

It’s is no longer just about manipulation. It’s about adding quality

and often utilitarian content to your website which meet a PURPOSE that

delivers USER SATISFACTION.

If you are serious about getting more free traffic from search

engines, get ready to invest time and effort into your website and

online marketing.

Google wants to rank QUALITY documents in its results, and force

those who want to rank high to invest in great content, or great

service, that attracts editorial links from other reputable websites.

If you’re willing to add a lot of great content to your website, and

create buzz about your company, Google will rank you high. If you try to

manipulate Google, it will penalise you for a period of time, and often

until you fix the offending issue – which we know can LAST YEARS.

Backlinks in general, for instance, are STILL weighed FAR too

positively by Google and they are manipulated to drive a site to the top

positions – for a while. That’s why blackhats do it – and they have the

business model to do it. It’s the easiest way to rank a site, still

today. If you are a real business who intends to build a brand online –

you can’t use black hat methods. Full stop.

Google Rankings Are In Constant Ever-Flux

It’s Google’s job to MAKE MANIPULATING SERPS HARD.

So – the people behind the algorithms keeps ‘moving the goalposts’,

modifying the ‘rules’ and raising ‘quality standards’ for pages that

compete for top ten rankings. In 2015 – we have ever-flux in the SERPS –

and that seems to suit Google and keep everybody guessing.

Google is very secretive about its ‘secret sauce’ and offers

sometimes helpful and sometimes vague advice – and some say offers

misdirection – about how to get more from valuable traffic from Google.

Google is on record as saying the engine is intent on

‘frustrating’ search engine optimisers attempts to improve the amount of

high quality traffic to a website – at least (but not limited to) –

using low quality strategies classed as web spam.

At its core, Google search engine optimisation is about

KEYWORDS and

LINKS. It’s about

RELEVANCE,

REPUTATION and

TRUST. It is about

QUALITY OF CONTENT &

VISITOR SATISFACTION.

A Good USER EXPERIENCE is the end goal.

Relevance, Authority & Trust

Web page optimisation is about making a web page being relevant enough for a query, and being trusted enough to rank for it.

It’s about ranking for valuable keywords for the long term, on merit.

You can play by ‘white hat’ rules laid down by Google, or you can

choose to ignore those and go ‘black hat’ – a ‘spammer’. MOST seo

tactics still work, for some time, on some level, depending on who’s

doing them, and how the campaign is deployed.

Whichever route you take, know that if Google catches you trying to

modify your rank using overtly obvious and manipulative methods, then

they

will class you a web spammer, and your site will be penalised (normally you will not rank high for important keywords).

These penalties can last years if not addressed, as some penalties

expire and some do not – and Google wants you to clean up any

violations.

Google does not want you to try and modify your rank. Critics would

say Google would prefer you paid them to do that using Google Adwords.

The problem for Google is – ranking high in Google organic listings

is a real social proof for a business, a way to avoid ppc costs and

still, simply, the BEST WAY to drive REALLY VALUABLE traffic to a site.

It’s FREE, too, once you’ve met the always-increasing criteria it takes to rank top.

In 2015, you need to be aware that what works to improve your rank

can also get you penalised (faster, and a lot more noticeably).

In particular, the Google web spam team is currently waging a pr war on sites that rely on

unnatural links and

other ‘manipulative’ tactics (and handing out severe penalties if it

detects them) – and that’s on top of many algorithms already designed to

look for other manipulative tactics (like keyword stuffing).

Google is making sure it takes longer to see results from black

and white

hat seo, and intent on ensuring a flux in it’s SERPS based largely on

where the searcher is in the world at the time of the search, and where

the business is located near to that searcher.

There are some things you cannot directly influence legitimately to

improve your rankings, but there is plenty you CAN do to drive more

Google traffic to a web page.

Ranking Factors

Google has HUNDREDS of ranking factors with signals that can change

daily to determine how it works out where your page ranks in comparison

to other competing pages.

You will not ever find them all. Many ranking factors are on page, on

site and some are off page, or off site. Some are based on where you

are, or what you have searched for before.

I’ve been in online marketing for 15 years. In that time, I’ve

learned to focus on optimising elements in campaigns that offer the

greatest return on investment of one’s labour.

Learn SEO Basics….

Here is few simple seo tips to begin with:

- If you are just starting out, don’t think you can fool Google

about everything all the time. Google has VERY probably seen your

tactics before. So, it’s best to keep your plan simple.

GET RELEVANT. GET REPUTABLE. Aim for a good, satisfying visitor

experience. If you are just starting out – you may as well learn how to

do it within Google’s Webmaster Guidelines first.

Make a decision, early, if you are going to follow Google’s guidelines,

or not, and stick to it. Don’t be caught in the middle with an

important project. Do not always follow the herd.

- If your aim is to deceive visitors from Google, in any way, Google

is not your friend. Google is hardly your friend at any rate – but you

don’t want it as your enemy. Google will send you lots of free traffic

though if you manage to get to the top of search results, so perhaps

they are not all that bad.

- A lot of optimisation techniques that are effective in boosting

sites rankings in Google are against Google’s guidelines. For

example: many links that may have once promoted you to the top of

Google, may in fact today be hurting your site and it’s ability to rank

high in Google. Keyword stuffing might be holding your page back…. You

must be smart, and cautious, when it comes to building links to your

site in a manner that Google *hopefully* won’t have too much trouble

with in the FUTURE. Because they will punish you in the future.

- Don’t expect to rank number 1 in any niche for a competitive without

a lot of investment, work. Don’t expect results overnight. Expecting

too much too fast might get you in trouble with the spam team.

- You don’t pay anything to get into Google, Yahoo or Bing natural, or

free listings. It’s common for the major search engines to find your

website pretty easily by themselves within a few days. This is made so

much easier if your website actually ‘pings’ search engines when you

update content (via XML sitemaps or RSS for instance).

- To be listed and rank high in Google and other search engines, you

really should consider and largely abide by search engine rules and official guidelines for inclusion.

With experience, and a lot of observation, you can learn which rules

can be bent, and which tactics are short term and perhaps, should be

avoided.

- Google ranks websites (relevancy aside for a moment) by the number

and quality of incoming links to a site from other websites (amongst

hundreds of other metrics). Generally speaking, a link from a page to

another page is viewed in Google “eyes” as a vote for that page the link

points to. The more votes a page gets, the more trusted a page can

become, and the higher Google will rank it – in theory. Rankings are

HUGELY affected by how much Google ultimately trusts the DOMAIN the page

is on. BACKLINKS (links from other websites – trump every other

signal.)

- I’ve always thought if you are serious about ranking – do so with

ORIGINAL COPY. It’s clear – search engines reward good content it hasn’t

found before. It indexes it blisteringly fast, for a start (within a

second, if your website isn’t penalised!). So – make sure each of your

pages has enough text content you have written specifically for that

page – and you won’t need to jump through hoops to get it ranking.

- If you have original quality content on a site, you also have a

chance of generating inbound quality links (IBL). If your content is

found on other websites, you will find it hard to get links, and it

probably will not rank very well as Google favours diversity in it’s

results. If you have decent original content on your site, you can then let authority websites – those with online business authority – know about it, and they might link to you – this is called a quality backlink.

- Search engines need to understand a link is a link. Links can be designed to be ignored by search engines with the rel nofollow attribute.

- Search engines can also find your site by other web sites linking to it. You can also submit your site to search engines direct,

but I haven’t submitted any site to a search engine in the last 10

years – you probably don’t need to do that. If you have a new site I

would immediately register it with Google Webmaster Tools these days.

- Google and Bing use a crawler (Googlebot and Bingbot) that spiders

the web looking for new links to spider. These bots might find a link to

your home page somewhere on the web and then crawl and index the pages

of your site if all your pages are linked together (in almost any way).

If your website has an xml sitemap, for instance, Google will use that

to include that content in it’s index. An xml site map is INCLUSIVE, not

EXCLUSIVE. Google will crawl and index every single page on your site –

even pages out with an xml sitemap.

- Many think Google will not allow new websites to rank well for

competitive terms until the web address “ages” and acquires “trust” in

Google – I think this depends on the quality of the incoming links.

Sometimes your site will rank high for a while then disappears for

months. A “honeymoon period” to give you a taste of Google traffic, no

doubt.

- Google WILL classify your site when it crawls and indexes your site –

and this classification can have a DRASTIC affect on your rankings –

it’s important for Google to work out WHAT YOUR ULTIMATE INTENT IS – do

you want to classified as an affiliate site made ‘just for Google’, a

domain holding page, or a small business website with a real purpose?

Ensure you don’t confuse Google by being explicit with all the signals

you can – to show on your website you are a real business, and your

INTENT is genuine – and even more importantly today – FOCUSED ON

SATISFYING A VISITOR.

- NOTE – If a page exists only to make money from Google’s free

traffic – Google calls this spam. I go into this more, later in this

guide.

- The transparency you provide on your website in text and links about

who you are, what you do, and how you’re rated on the web or as a

business is one way that Google could use (algorithmically and manually)

to ‘rate’ your website. Note that Google has a HUGE army of quality

raters and at some point they will be on your site if you get a lot of

traffic from Google.

- To rank for specific keyword phrase searches, you generally need to

have the keyword phrase or highly relevant words on your page (not

necessarily altogether, but it helps) or in links pointing to your

page/site.

- Ultimately what you need to do to compete is largely dependent on

what the competition for the term you are targeting is doing. You’ll

need to at least mirror how hard they are competing, if a better

opportunity is hard to spot.

- As a result of other quality sites linking to your site, the site now has a certain amount of real PageRank that

is shared with all the internal pages that make up your website that

will in future help provide a signal to where this page ranks in the

future.

- Yes, you need to build links to your site to acquire more PageRank, or Google ‘juice’ – or what we now call domain authority or trust.

Google is a links based search engine – it does not quite understand

‘good’ or ‘quality’ content – but it does understand ‘popular’ content.

It can also usually identify poor, or THIN CONTENT – and it penalises

your site for that – or – at least – it takes away the traffic you once

had with an algorithm change. Google doesn’t like calling actions the

take a ‘penalty’ – it doesn’t look good. They blame your ranking drops

on their engineers getting better at identifying quality content or

links, or the inverse – low quality content and unnatural links. If they

do take action your site for paid links – they call this a ‘Manual

Action’ and you will get notified about it in Webmaster Tools if you sign up.

- Link building is

not JUST a numbers game, though. One link from a “trusted authority”

site in Google could be all you need to rank high in your niche. Of

course, the more “trusted” links you build, the more trust Google will

have in your site. It is evident you need MULTIPLE trusted links from

MULTIPLE trusted websites to legitimately get the most from Google.

- Try and get links within page text pointing to your site with

relevant, or at least natural looking, keywords in the text link – not,

for instance, in blogrolls or site wide links. Try to ensure the links

are not obviously “machine generated” e.g. site-wide links on forums or

directories. Get links from pages, that in turn, have a lot of links to

them, and you will soon see benefits.

- Onsite, consider linking to your other pages by linking to them within text. I

usually only do this when it is relevant – often, I’ll link to relevant

pages when the keyword is in the title elements of both pages. I don’t

really go in for auto-generating links at all. Google has penalised

sites for using particular auto link plugins, for instance, so I avoid

them.

- Linking to a page with actual key-phrases in the link help a great

deal in all search engines when you want to feature for specific

key-terms. For example; “seo scotland” as opposed

to http://www.hobo-web.co.uk or “click here“. Saying that – in 2015,

Google is punishing manipulative anchor text

very aggressively, so be sensible – and stick to brand links and plain

url links that build authority with less risk. I rarely ever optimise

for grammatically incorrect terms these days (especially links).

- I think the anchor text links in internal navigation is still

valuable – but keep it natural. Google needs links to find and help

categorise your pages. Don’t underestimate the value of a clever

internal link keyword-rich architecture and be sure to understand for

instance how many words Google counts in a link, but don’t overdo it.

Too many links on a page could be seen as a poor user experience. Avoid

lots of hidden links in your template navigation.

- Search engines like Google ‘spider’ or ‘crawl’ your entire site by

following all the links on your site to new pages, much as a human would

click on the links of your pages. Google will crawl and index your

pages, and within a few days normally, begin to return your pages in

SERPS.

- After a while, Google will know about your pages, and keep the ones

it deems ‘useful’ – pages with original content, or pages with a lot of

links to them. The rest will be de-indexed. Be careful – too many low

quality pages on your site will impact your overall site performance in

Google. Google is on record talking about good and bad ratios of quality

content to low quality content.

- Ideally you will have unique pages, with unique page titles and unique page descriptions if

you deem to use the latter. Google does not seem to use the meta

description when actually ranking your page for specific keyword

searches if not relevant and unless you are careful if you might end up

just giving spammers free original text for their site and not yours

once they scrape your descriptions and put the text in main content on

their site. I don’t worry about meta keywords these days as Google and Bing say they either ignore them or use them as spam signals.

- Google will take some time to analyse your entire site, analysing

text content and links. This process is taking longer and longer these

days but is ultimately determined by your domain authority / real

PageRank as Google determines it.

- If you have a lot of duplicate low quality text already found by

Googlebot on other websites it knows about, Google will ignore your

page. If your site or page has spammy signals, Google will penalise it,

sooner or later. If you have lots of these pages on your site – Google

will ignore your efforts.

- You don’t need to keyword stuff your text and look dyslexic to beat the competition.

- You optimise a page for more traffic by increasing the frequency of

the desired key phrase, related key terms, co-occurring keywords and

synonyms in links, page titles and text content. There is no ideal amount of text – no magic keyword density. Keyword stuffing is a tricky business, too, these days.

- I prefer to make sure I have as many UNIQUE relevant words on the

page that make up as many relevant long tail queries as possible.

- If you link out to irrelevant sites, Google may ignore the page, too

– but again, it depends on the site in question. Who you link to, or

HOW you link to, REALLY DOES MATTER – I expect Google to use your

linking practices as a potential means by which to classify your site.

Affiliate sites for example don’t do well in Google these days without

some good quality backlinks.

- Many search engine marketers think who you actually link out to (and

who links to you) helps determine a topical community of sites in any

field, or a hub of authority. Quite simply, you want to be in that hub,

at the centre if possible (however unlikely), but at least in it. I like

to think of this one as a good thing to remember in the future as

search engines get even better at determining topical relevancy of

pages, but I have never really seen any granular ranking benefit (for

the page in question) from linking out.

- I’ve got by, by thinking external links to other sites should

probably be on single pages deeper in your site architecture, with the

pages receiving all your Google Juice once it’s been “soaked up” by the

higher pages in your site structure (the home page, your category

pages). This is old school though – but it still gets me by. I don’t

need to think you really need to worry about that in 2015.

- Original content is king and will attract a “natural link growth” –

in Google’s opinion. Too many incoming links too fast might devalue your

site, but again. I usually err on the safe side – I always aimed for

massive diversity in my links – to make them look ‘more natural’.

Honestly, I go for natural links in 2015 full stop.

- Google can devalue whole sites, individual pages, template generated

links and individual links if Google deems them “unnecessary” and a

‘poor user experience’.

- Google knows who links to you, the “quality” of those links, and

whom you link to. These – and other factors – help ultimately determine

where a page on your site ranks. To make it more confusing – the page

that ranks on your site might not be the page you want to rank, or even

the page that determines your rankings for this term. Once Google has

worked out your domain authority – sometimes it seems that the most

relevant page on your site Google HAS NO ISSUE with will rank.

- Google decides which pages on your site are important or most

relevant. You can help Google by linking to your important pages and

ensuring at least one page is really well optimised amongst the rest of

your pages for your desired key phrase. Always remember Google does not

want to rank ‘thin’ pages in results – any page you want to rank –

should have all the things Google is looking for. PS – That’s a lot

these days!

- It is important you spread all that real ‘PageRank’ – or link equity

– to your sales keyword / phrase rich sales pages, and as much remains

to the rest of the site pages, so Google does not ‘demote’ pages into

oblivion – or ‘supplemental results’ as we old timers knew them back in

the day. Again – this is slightly old school – but it gets me by even

today.

- Consider linking to important pages on your site from your home page, and other important pages on your site.

- Focus on RELEVANCE first. Then, focus your marketing efforts and get

REPUTABLE. This is the key to ranking ‘legitimately’ in Google in 2015.

- Every few months Google changes it’s algorithm to punish sloppy

optimisation or industrial manipulation. Google Panda and Google Penguin

are two such updates, but the important thing is to understand Google

changes its algorithms constantly to control its listings pages (over

600 changes a year we are told).

- The art of rank modification is to rank without tripping these

algorithms or getting flagged by a human reviewer – and that is tricky!

Welcome to the

tightrope that is modern web optimisation.

Read on if you would like to learn how to seo….

Keyword Research is ESSENTIAL

The first step in any professional campaign is to do some keyword research and analysis.

Somebody asked me about this a simple white hat tactic and I

think what is probably the simplest thing anyone can do that guarantees

results.

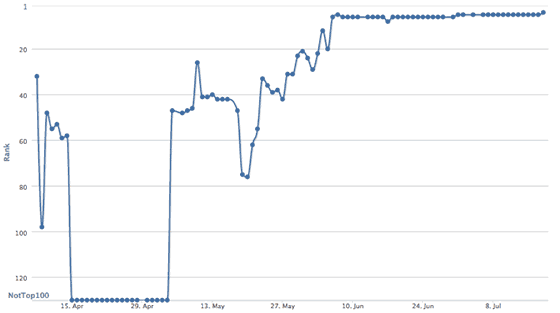

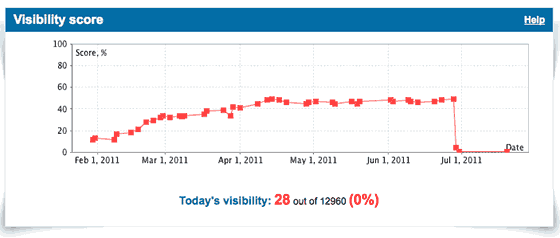

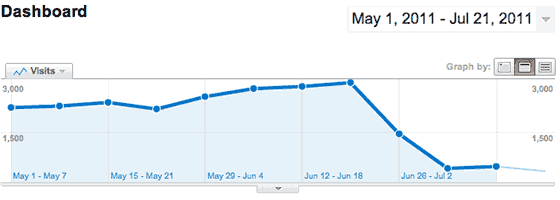

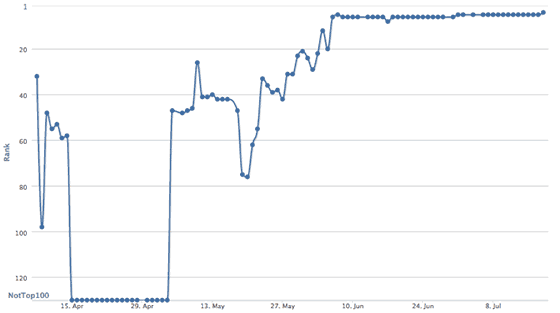

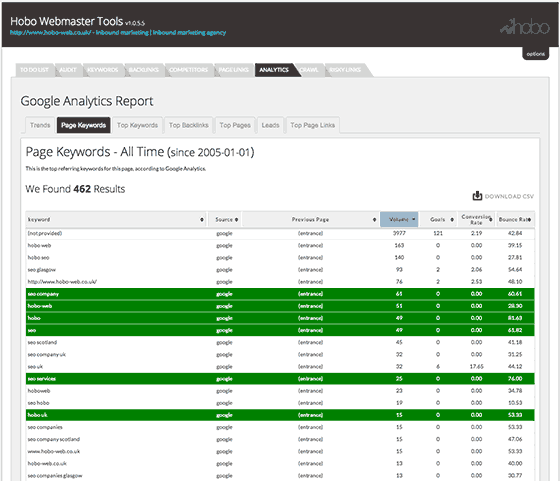

The chart above (from last year) illustrates a reasonably

valuable 4 word term I noticed a page I had didn’t rank high in Google

for, but I thought probably should and could rank for, with this simple

technique.

I thought it as simple as an example to illustrate an aspect of

onpage seo, or ‘rank modification’, that’s white hat, 100% Google

friendly and never, ever going to cause you a problem with Google. This

‘trick’ works with any keyword phrase, on any site, with obvious

differing results based on availability of competing pages in SERPS, and

availability of content on your site.

The keyword phrase I am testing rankings for isn’t ON the page, and I

did NOT add the key phrase…. or in incoming links, or using any

technical tricks like redirects or any hidden technique, but as you can

see from the chart, rankings seem to be going in the right direction.

You can profit from it if you know a little about how Google works

(or seems to work, in many observations, over years, excluding when

Google throws you a bone on synonyms. You can’t ever be 100% certain you

know how Google works on any level, unless it’s data showing your

wrong, of course.)

What did I do to rank number 1 from nowhere for that key phrase?

I added one keyword

to to the page in plain text, because adding the actual ‘keyword

phrase’ itself would have made my text read a bit keyword stuffed for

other variations of the main term. It gets interesting if you

do that to a lot of pages, and a lot of keyword phrases. The important

thing is keyword research – and knowing which unique keywords to add.

This illustrates a key to ‘relevance’ is…. a keyword. The right keyword.

Yes – plenty of other things can be happening at the same time. It’s

hard to identify EXACTLY why Google ranks pages all the time…but you can

COUNT on other things happening and just get on with what you can see

works for you.

In a time of

light optimisation, it’s useful to earn a few terms you SHOULD rank for in simple ways that leave others wondering how you got it.

Of course you can still keyword stuff a page, or still spam your link

profile – but it is ‘light’ optimisation I am genuinely interested in

testing on this site – how to get more with less – I think that’s the

key to not tripping Google’s aggressive algorithms.

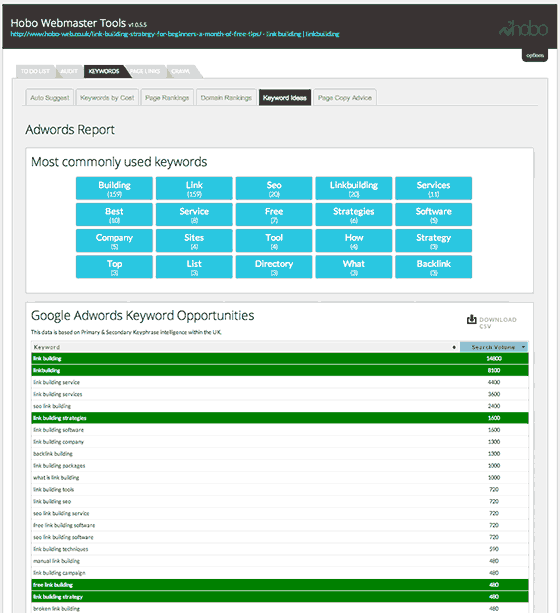

There are many tools on the web to help with basic keyword research (including the Google Keyword Planner

tool and there are

even more useful third party seo tools to help you do this).

You can use many keyword research tools to quickly identify opportunities to get more traffic to a page:

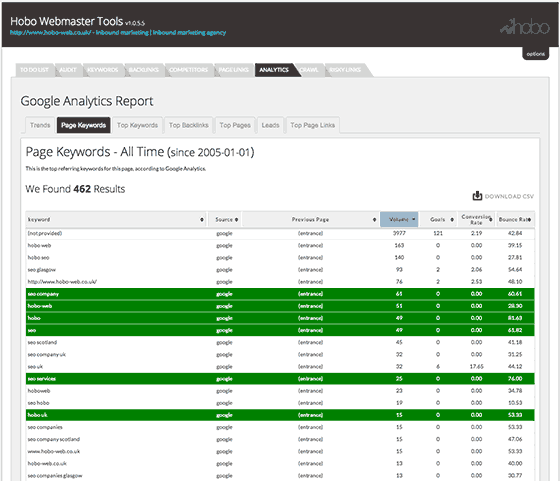

Google Analytics Keyword ‘Not Provided’

Google Analytics was the very best place to look at keyword

opportunity for some (especially older) sites, but that all changed a

few years back.

Google

stopped telling us which keywords are sending traffic to our sites from the search engine back in October 2011, as part of privacy concerns for it’s users.

Google will now begin encrypting searches that people do

by default, if they are logged into Google.com already through a secure

connection. The change to SSL search also means that sites people visit

after clicking on results at Google will no longer receive “referrer”

data that reveals what those people searched for, except in the case of

ads.

Google Analytics now instead displays – keyword “

not provided“, instead.

In Google’s new system, referrer data will be blocked.

This means site owners will begin to lose valuable data that they depend

on, to understand how their sites are found through Google. They’ll

still be able to tell that someone came from a Google search. They

won’t, however, know what that search was. SearchEngineLand

You can still get

some of this data if you sign up for

Google Webmaster Tools (and

you can combine this in Google Analytics) but the data even there is

limited and often not entirely the most accurate. The keyword data can

be useful though – and access to backlink data is essential these days.

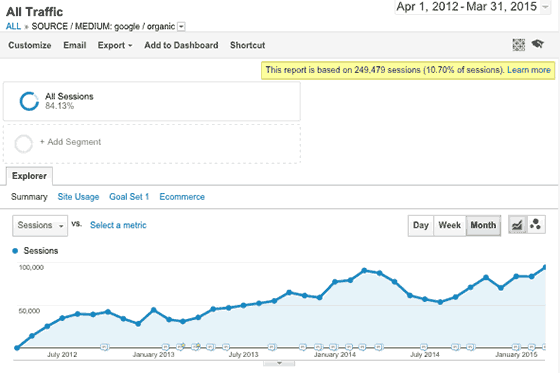

If the website you are working on is an aged site – there’s probably a wealth of keyword data in Google Analytics:

This is another example of Google making ranking in organic

listings HARDER – a change for ‘users’ that seems to have the most

impact on ‘marketers’ outside of Google’s ecosystem – yes – search

engine optimisers.

Now, consultants need to be page centric (abstract, I know), instead

of just keyword centric when optimising a web page for Google. There are

now plenty of third party tools that help when researching keywords but

most of us miss the kind of keyword intelligence we used to have access

to.

Proper keyword research is important because getting a site to the

top of Google eventually comes down to your text content on a page and

keywords in external & internal links. All together, Google uses

these signals to determine where you rank if you rank at all.

There’s no magic bullet, to this.

At any one time, your site is probably feeling the influence of some sort of algorithmic filter (for example, Google Panda or Google Penguin) designed to keep spam sites under control and deliver relevant high quality results to human visitors.

One filter may be kicking in keeping a page down in the SERPS, while

another filter is pushing another page up. You might have poor content

but excellent incoming links, or vice versa. You might have very good

content, but a very poor technical organisation of it.

Try and identify the reasons Google doesn’t ‘rate’ a particular page

higher than the competition – the answer is usually on the page or in

backlinks pointing to the page.

- Do you have too few inbound quality links?

- Do you have too many?

- Does your page lack descriptive keyword rich text?

- Are you keyword stuffing your text?

- Do you link out to irrelevant sites?

- Do you have too many advertisements above the fold?

- Do you have affiliate links on every page of your site, and text found on other websites?

Whatever they are, identify issues and fix them.

Get on the wrong side of Google and your site might well be flagged for MANUAL review – so

optimise your site as if, one day, you will get that website review from a Google Web Spam reviewer.

The key to a successful campaign, I think, is persuading Google that

your page is most relevant to any given search query. You do this by

good unique keyword rich text content and getting “quality” links to

that page. The latter is far easier to say these days than actually do!

Next time your developing a page, consider what looks spammy to you

is probably spammy to Google. Ask yourself which pages on your site are

really necessary. Which links are necessary? Which pages on the site are

emphasised in the site architecture? Which pages would you ignore?

You can help a site along in any number of ways (including making

sure your page titles and meta tags are unique) but be careful. Obvious

evidence of ‘rank modifying’ is dangerous.

I prefer simple seo techniques, and ones that can be measured in some way. I have never

just wanted to

rank for competitive terms;

I have always wanted to understand at least some of the reasons why a

page ranked for these key phrases. I try to create a good user

experience for for humans AND search engines. If you make high quality

text content relevant and suitable for both these audiences, you’ll more

than likely find success in organic listings and you might not ever

need to get into the technical side of things, like redirects and search engine friendly urls.

To beat the competition in an industry where it’s difficult to

attract quality links, you have to get more “technical” sometimes – and

in some industries – you’ve traditionally needed to be 100% black hat to

even get in the top 100 results of competitive, transactional searches.

There are no hard and fast rules to long term ranking success, other

than developing quality websites with quality content and quality links

pointing to it. The less domain authority you have, the more text you’re

going to need. The aim is to build a satisfying website and build real

authority!

You need to mix it up and learn from experience. Make mistakes and

learn from them by observation. I’ve found getting penalised is a very

good way to learn what not to do.

Remember there are exceptions to nearly every rule, and in an ever

fluctuating landscape, and you probably have little chance determining

exactly

why you rank in search engines these days. I’ve been

doing it for over 10 years and everyday I’m trying to better understand

Google, to learn more and learn from others’ experiences.

It’s important not to obsess about granular ranking specifics that

have little return on your investment, unless you really have the time

to do so! THERE IS USUALLY SOMETHING MORE VALUABLE TO SPEND THAT TIME

ON. That’s usually either good backlinks or great content.

Fundamentals

The fundamentals of successful optimisation while refined have not

changed much over the years – although Google does seem a LOT better

than it was at rewarding pages with some reputation signals and

satisfying content / usability.

Google isn’t lying about rewarding legitimate effort – despite what some claim. If they were I would be a black hat full time. So would everybody else trying to rank in Google.

The majority of small to medium businesses do not need advanced

strategies because their direct competition generally has not employed

these tactics either.

Most strategies are pretty simple in construct.

I took a medium sized business to the top of Google recently for very

competitive terms doing nothing but ensuring page titles where

optimised, the home page text was re-written, one or two earned links

from trusted sites.

This site was a couple of years old, a clean record in Google, and a couple of organic links already from trusted sites.

This domain had the authority and trust to rank for some valuable

terms, and all we had to do was to make a few changes on site, improve

the depth and focus of website content, monitor keyword performance and

tweak.

There was a little

duplicate content needing sorting out and a bit of canonicalisation of thin content to resolve, but

none of the measures I implemented I’d call advanced.

A lot of businesses can get more converting visitors from Google simply by following basic principles and best practices:

- always making sure that every page in the site links out to at least one other page in the site

- link to your important pages often

- link not only from your navigation, but from keyword rich text links in text content – keep this natural and for visitors

- try to keep each page element and content unique as possible

- build a site for visitors to get visitors and you just might convert some to actual sales too

- create keyword considered content on the site people will link to

- watch which sites you link to and from what pages, but do link out!

- go and find some places on relatively trusted sites to try and get some anchor text rich inbound links

- monitor trends, check stats

- minimise duplicate or thin content

- bend a rule or two without breaking them and you’ll probably be ok

…. once this is complete it’s time to … add more, and better content

to your site and tell more people about it, if you want more Google

love.

OK, so you might have to implement the odd 301, but again, it’s hardly advanced.

I’ve seen simple seo marketing techniques working for years.

You are better off doing simple stuff better and faster than worrying

about some of the more ‘advanced’ techniques you read on some blogs I

think – it’s more productive, cost effective for businesses and safer,

for most.

Beware Pseudoscience

Pseudoscience is a claim, belief, or practice

posing as science, but which does not constitute or adhere to an

appropriate scientific methodology…

Beware folk trying to bamboozle you with science. This isn’t a science when Google controls the ‘laws’ and changes them at will.

You see I have always thought that optimisation was about:

- looking at Google rankings all night long,

- keyword research

- observations about ranking performance of your own pages and that of others (though not in a controlled environment)

- putting relevant, co-ocurring words you want to rank for on pages

- putting words in links to pages you want to rank for

- understanding what you put in your title, that’s what you are going to rank best for

- getting links from other websites pointing to yours

- getting real quality links that will last from sites that are pretty trustworthy

- publishing lots and lots of content (did I say lots? I meant tons)

- focusing on the long tail of search!!!

- understanding it will take time to beat all this competition

I always expected to get a site demoted by:

- getting too many links with the same anchor text pointing to a page

- keyword stuffing a page

- trying to manipulate google too much on a site

- creating a “frustrating user experience”

- chasing the algorithm too much

- getting links I shouldn’t have

- buying links

Not that any of the above is automatically penalised all the time.

I was always of the mind I don’t need to understand the maths or

science of Google, that much, to understand what Google engineers want.

The biggest challenge these days are to get really trusted sites to link to you, but the rewards are worth it.

To do it, you probably should be investing in some sort of marketable

content, or compelling benefits for the linking party (that’s not just

paying for links somebody else can pay more for). Buying links to

improve rankings WORKS but it is probably THE most hated link building

technique as far as the Google Webspam team is concerned.

I was very curious about about the science of optimisation I studied

what I could but it left me a little unsatisfied. I learned

building links, creating

lots of decent content and learning

how to monitise that content better (whilst not breaking any major TOS of Google) would have been a more worthwhile use of my time.

Getting

better and faster at doing all that would be nice too.

There’s many problems with blogs,too, including mine.

Misinformation is an obvious one. Rarely are your results conclusive

or observations 100% accurate. Even if you think a theory holds water on

some level. I try to update old posts with new information if I think

the page is only valuable with accurate data.

Just remember most of what you read about how Google works from a

third party is OPINION and just like in every other sphere of knowledge,

‘facts’ can change with a greater understanding over time or with a

different perspective.

Chasing The Algorithm

There is no magic bullet and there are no secret formulas to achieve

fast number 1 ranking in Google in any competitive niche WITHOUT

spamming Google.

Legitimately earned high positions in search engines in 2015 takes a lot of hard work.

There are a few less talked about tricks and tactics that are

deployed by some better than others to combat Google Panda, for

instance, but there are no big secrets (no “white hat” secrets anyway).

There is clever strategy, though, and creative solutions to be found to

exploit opportunities uncovered by researching the niche. As soon as

Google sees a strategy that gets results… it usually becomes ‘out with

the guidelines’ and something you can be penalised for – so

beware jumping on the latest fad.

The biggest advantage any one provider has over another is experience

and resource. The knowledge of what doesn’t work and what will actually

hurt your site is often more valuable than knowing what will give you a

short lived boost. Getting to the top of Google is a relatively simple

process. One that is constantly in change. Professional SEO is more a

collection of skills, methods and techniques. It is more a way of doing

things, than a one-size-fits all magic trick.

After over a decade practicing and deploying real campaigns, I’m

still trying to get it down to it’s simplest , most cost effective

processes. I think it’s about doing simple stuff right. From my

experience, this realised time and time again. Good text, simple

navigation structure, quality links. To be relevant and reputable takes

time, effort and luck, just like anything else in the real world, and

that is the way Google want it.

If a company is promising you guaranteed rankings, and has a magic

bullet strategy, watch out. I’d check it didn’t contravene Google’s

guidelines.

How long does it take to see results?

Some results can be gained within weeks and you need to expect some strategies to take months to see the benefit.

Google WANTS these efforts to take time. Critics

of the search engine giant would point to Google wanting fast effective

rankings to be a feature of Googles own Adwords sponsored listings.

Optimisation is not a quick process, and a successful campaign can be

judged on months if not years. Most successful, fast ranking website

optimisation techniques end up finding their way into Google Webmaster

Guidelines – so be wary.

It takes time to build quality, and it’s this quality that Google aims to reward.

It takes time to generate the data needed to begin to formulate a

campaign, and time to deploy that campaign. Progress also depends on

many factors

- How old is your site compared to the top 10 sites?

- How many back-links do you have compared to them?

- How is their quality of back-links compared to yours?

- What the history of people linking to you (what words have people been using to link to your site?)

- How good of a resource is your site?

- Can your site attract natural back-links (e.g. you have good content

or a great service) or are you 100% relying on your agency for

back-links (which is very risky in 2015)?

- How much unique content do you have?

- Do you have to pay everyone to link to you (which is risky), or do you have a “natural” reason why people might link to you?

Google wants to return quality pages in it’s organic listings, and it

takes time to build this quality and for that quality to be recognised.

It takes time too to balance your content, generate quality backlinks and manage your disavowed links.

Google knows how valuable organic traffic is – and they want webmasters investing a LOT of effort in ranking pages.

Critics will point out the higher the cost of expert SEO, the better

looking Adwords becomes, but Adwords will only get more expensive, too.

At some point, if you want to compete online, your going to HAVE to

build a quality website, with a unique offering to satisfy returning

visitors – the sooner you start, the sooner you’ll start to see results.

If you start NOW, and are determined to build an online brand, a

website rich in content with a satisfying user experience – Google will

reward you in organic listings.

ROI

Web optimisation, is a marketing channel just like any other and

there are no guarantees of success in any, for what should be obvious

reasons. There are no guarantees in Google Adwords either, except that

costs to compete will go up, of course.

That’s why it is so attractive – but like all marketing – it is still a gamble.

At the moment, I don’t know you, your business, your website, it’s

resources, your competition or your product. Even with all that

knowledge, calculating ROI is extremely difficult because ultimately

Google decides on who ranks where in it’s it’s results – sometimes

that’s ranking better sites, and sometimes (often) it is ranking sites

breaking the rules above yours.

Nothing is absolute in search. There are no guarantees – despite

claims from some companies. What you make from this investment is

dependant on many things, not least, how suited your website is to

actually convert into the sales the extra visitors you get.

Every site is different.

Big Brand campaigns are far, far different from small business seo

campaigns who don’t have any links to begin with, to give you but one

example.

It’s certainly easier, if the brand in question has a lot of domain

authority just waiting to be awoken – but of course that’s a

generalisation as big brands have big brand competition too. It depends

entirely on the quality of the site in question and the level and

quality of the competition, but smaller businesses should probably look

to own their niche, even if limited to their location, at first.

Local SEO is always a good place to start for small businesses.

There ARE some things that are evident, with a bit of experience on your side:

Page Title Tag Best Practice

<title>What Is The Best Title Tag For Google?</title>

The page title tag (or HTML Title Element) is arguably the most important on page ranking factor (with regards to

web page optimisation).

Keywords in page titles can HELP your pages rank higher in Google

results pages (SERPS). The page title is also often used by Google as

the title of a search snippet link in search engine results pages.

For me, a perfect title tag in Google is dependant on a number of

factors and I will lay down a couple below but I have since expanded

page title advice on another page (link below);

- A page title that is highly relevant to the page it

refers to will maximise it’s usability, search engine ranking

performance and click through satisfaction rate. It will probably be

displayed in a web browsers window title bar, and in clickable search

snippet links used by Google, Bing & other search engines. The title

element is the “crown” of a web page with important keyword phrase featuring AT LEAST ONCE within it.

- Most modern search engines have traditionally placed a lot of importance in the words contained within this html element. A good page title is made up of keyword phrases of value and/or high search volumes.

- The last time I looked Google displayed as many characters as it can

fit into “a block element that’s 512px wide and doesn’t exceed 1 line

of text”. So – THERE BECAME NO AMOUNT OF CHARACTERS any optimiser could lay down as exact best practice to GUARANTEE a title will display, in full in Google, at least, as the search snippet title. Ultimately

– only the characters and words you use will determine if your entire

page title will be seen in a Google search snippet. Recently Google displayed 70 characters in a title – but that changed in 2011/2012.

- If you want to ENSURE your FULL title tag shows in the desktop UK version of Google SERPS, stick to a shorter title of about 55 characters

but that does not mean your title tag MUST end at 55 characters and

remember your mobile visitors see a longer title (in the UK, in March

2015 at least). I have seen ‘up-to’ 69 characters (back in 2012) – but

as I said – what you see displayed in SERPS depends on the characters

you use. In 2015 – I just expect what Google displays to change – so I

don’t obsess about what Google is doing in terms of display.

- Google is all about ‘user experience’ and ‘visitor satisfaction’ in

2015 so it’s worth remembering that usability studies have shown that a

good page title length is about seven or eight words long and fewer than 64 total characters. Longer

titles are less scannable in bookmark lists, and might not display

correctly in many browsers (and of course probably will be truncated in

SERPS).

- Google will INDEX perhaps 1000s of characters in a

title… but I don’t think no-one knows exactly how many characters or

words Google will actually count AS a TITLE when determining relevance

for ranking purposes. It is a very hard thing to try to isolate

accurately with all the testing and obfuscation Google uses to hide it’s

‘secret sauce’. I have had ranking success with longer titles – much

longer titles. Google certainly reads ALL the words in your page title (unless you are spamming it silly, of course).

- You can probably include up to 12 words that will be counted as part of a page title,

and consider using your important keywords in the first 8 words. The

rest of your page title will be counted as normal text on the page.

- NOTE, in 2015, the html title element you choose for your page, may not be what Google chooses to include in your SERP snippet. The search snippet title and description is very much QUERY dependant these days. Google often chooses what it thinks

is the most relevant title for your search snippet, and it can use

information from your page, or in links to that page, to create a

very different SERP snippet title.

- When optimising a title, you are looking to rank for as many terms

as possible, without keyword stuffing your title. Often, the best bet is

to optimise for a particular phrase (or phrases) – and take a more

long-tail approach. Note that too many page titles and not enough actual

page text per page could lead to Google Panda or other ‘user

experience’ performance issues. A highly relevant unique page title is no longer enough to float a page with thin content. Google cares WAY too much about the page text content these days to let a good title hold up a thin page on most sites.

- Some page titles do better with a call to action – a call to action which reflects exactly a searcher’s intent (e.g. to learn something, or buy something, or hire something. Remember this is your hook

in search engines, if Google chooses to use your page title in its

search snippet, and there is a lot of competing pages out there in 2015.

- The perfect title tag on a page is unique to other pages on

the site. In light of Google Panda, an algorithm that looks for a

‘quality’ in sites, you REALLY need to make your page titles UNIQUE, and

minimise any duplication, especially on larger sites.

- I like to make sure my keywords feature as early as possible in a

title tag but the important thing is to have important keywords and key

phrases in your page title tag SOMEWHERE.

- For me, when improved search engine visibility is more important

than branding, the company name goes at the end of the tag, and I use a

variety of dividers to separate as no one way performs best. If you have

a recognisable brand – then there is an argument for putting this at

the front of titles – although Google often will change your title

dynamically – sometimes putting your brand at the front of your snippet

link title itself.

- Note that Google is pretty good these days at removing any special

characters you have in your page title – and I would be wary of trying

to make your title or Meta Description STAND OUT using special

characters. That is not what Google wants, evidently, and they do give

you a further chance to make your search snippet stand out with RICH

SNIPPETS and SCHEMA markup.

- I like to think I write titles for search engines AND humans.

- Know that Google tweaks everything regularly – why not what the perfect title keys off? So MIX it up…

- Don’t obsess. Natural is probably better, and will only get better as engines evolve. I optimise for key-phrases, rather than just keywords.

- I prefer mixed case page titles as I find them more scannable than titles with ALL CAPS or all lowercase.

- Generally speaking, the more domain trust/authority your SITE has in

Google, the easier it is for a new page to rank for something. So bear

that in mind. There is only so much you can do with your page titles –

your websites rankings in Google are a LOT more to do with OFFSITE

factors than ONSITE ones – negative and positive.

- Click through rate is something that is likely measured by Google

when ranking pages (Bing say they use it too, and they now power Yahoo),

so it is really worth considering whether you are best optimising your

page titles for click-through rate or optimising for more search engine

rankings.

- I would imagine keyword stuffing your page titles could be one area Google look at (although I see little evidence of it).

- Remember….think ‘keyword phrase‘ rather than ‘keyword‘, ‘keyword‘ ,’keyword‘… think Long Tail.

- Google will select the best title it wants for your search snippet –

and it will take that information from multiple sources, NOT just your

page title element. A small title is often appended with more

information about the domain. Sometimes, if Google is confident in the

BRAND name, it will replace it with that (often adding it to the

beginning of your title with a colon, or sometimes appending the end of

your snippet title with the actual domain address the page belongs to).

A Note About Title Tags;

When you write a page title, you have a chance right at the beginning

of the page to tell Google (and other search engines) if this is a spam

site or a quality site – such as – have you repeated the keyword 4

times or only once? I think title tags, like everything else, should

probably be as simple as possible, with the keyword once and perhaps a

related term if possible.

I always aim to keep my html page title elements things as simple, and looking as human-generated and unique, as possible.

I’m certainly cleaning up the way I write my titles all the time. How do you do it?

More Reading:

External Links

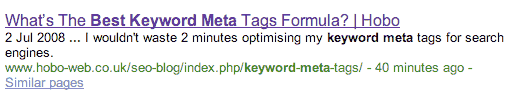

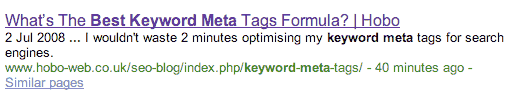

Meta Keywords Best Practice

A hallmark of shady natural search engine optimisation companies –

the meta-keywords tag. Companies that waste time and resources on these

items waste clients money – that’s a fact:

<meta name="Keywords" content="s.e.o., search engine optimisation, optimization">

I have one piece of advice with the meta keyword tag, which like the

title tag, goes in the head section of your web page,

forget about them.

If you are relying on meta-keyword optimisation to rank for terms,

your dead in the water. From what I see, Google + Bing ignores meta

keywords – or at least places no weight in them to rank pages. Yahoo may

read them, but really, a search engine optimiser has more important

things to worry about than this nonsense.

What about other search engines that use them? Hang on while I submit

my site to those 75,000 engines first [sarcasm!]. Yes, 10 years ago

early search engines liked looking at your meta-keywords. I’ve seen OPs

in forums ponder which is the best way to write these tags – with

commas, with spaces, limiting to how many characters. Forget about

meta-keyword tags – they are a pointless waste of time and bandwidth.

Could probably save a rain-forest with the bandwidth costs we save if

everybody removed their keyword tags.

Tin Foil Hat Time

So you have a new site….. you fill your home page meta tags with the

20 keywords you want to rank for – hey, that’s what optimisation is all

about, isn’t it? You’ve just told Google by the third line of text what

to sandbox you

for . The meta name=”Keywords” was actually

originally for words that weren’t actually on the page that would help

classify the document.

Sometimes competitors might use the information in your keywords to determine what you are trying to rank for, too….

If everybody removed them and stopped abusing meta keywords Google

would probably start looking at them but that’s the way of things in

search engines.

I 100% ignore meta keywords and remove them from pages I work on.

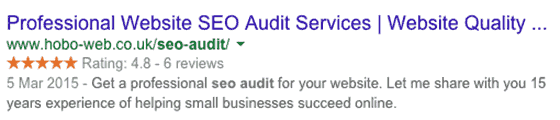

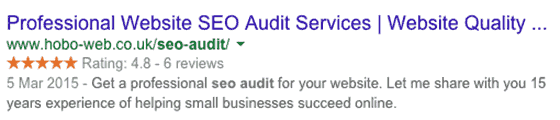

Meta Description Best Practice

Like the

title element and

unlike the

meta keywords tag, this one is important, both from a human and search engine perspective.

<meta name="Description" content="Get your site on the first page of Google,

Yahoo and Bing. Call us on 0845 094 0839. A company based in Scotland." />

Forget whether or not to put your keyword in it, make it relevant to a

searcher and write it for humans, not search engines. If you want to

have this 20 word snippet which accurately describes the page you have

optimised for one or two keyword phrases when people use Google to

search, make sure the keyword is in there.

I must say, I normally do include the keyword in the description as

this usually gets it in your serp snippet, but I think it would be a

fair guess to think more trusted sites would benefit more from any boost

a keyword in the meta description tag might have, than an untrusted

site would.

Google looks at the description but there is debate whether it

actually uses the description tag to rank sites. I think they might at

some level, but again, a very weak signal. I certainly don’t know of an

example that clearly shows a meta description helping a page rank.

Some times, I will ask a question with my titles, and answer it in the description, sometimes I will just give a hint;

That is a lot more difficult in 2015 as search snippets change depending on what Google wants to emphasise to its users.

It’s also very important in my opinion to have

unique title tags and

unique meta descriptions on every page on your site.

It’s a preference of mine, but I don’t generally autogenerate

descriptions with my cms of choice either – normally I’ll elect to

remove the tag entirely before I do this, and my pages still do well

(and Google generally pulls a decent snippet out on it’s own which you

can then go back and optimise for SERPS. There are times when I do

autogenerate descriptions and that’s when I can still make them unique

to the page using using some sort of server-side php.

Tin Foil Hat Time

Sometimes I think if your titles are spammy, your keywords are

spammy, and your meta description is spammy, Google might stop right

there – even they probably will want to save bandwidth at some

time. Putting a keyword in the description won’t take a crap site to

number 1 or raise you 50 spots in a competitive niche – so why optimise

for a search engine when you can optimise for a human? – I think that is

much more valuable, especially if you are in the mix already – that is –

on page one for your keyword.

So, the meta description tag is important in Google, Yahoo and Bing

and every other engine listing – very important to get it right. Make it

for humans.

Oh and by the way – Google seems to truncate anything over @156

characters in the meta description, although this may actually be

limited by pixel width in 2015.

More Reading:

External Links

Robots Meta Tag

Thus far I’ve theorised about the

Title Element, the

Meta Description Tag and Meta Keywords Tag. Next:

The Robots Meta Tag;

<meta name="robots" content="index, nofollow" />

I could use the above meta tag to tell Google to

index the page but not to

follow any links on the page, if for some reason, I did not want the page to appear in Google search results.

By default, Googlebot will index a page and follow links

to it. So there’s no need to tag pages with content values of INDEX or

FOLLOW. GOOGLE

There are various instructions you can make use of in your Robots

Meta Tag, but remember Google by default WILL index and follow links, so

you have NO need to include that as a command –

you can leave the robots meta out completely – and probably should if you don’t have a clue.

Googlebot understands any combination of lowercase and uppercase. GOOGLE.

Valid values for Robots Meta Tag ”

CONTENT” attribute are: “

INDEX“, “

NOINDEX“, “

FOLLOW“, “

NOFOLLOW“. Pretty self explanatory.

Examples:

- META NAME=”ROBOTS” CONTENT=”NOINDEX, FOLLOW”

- META NAME=”ROBOTS” CONTENT=”INDEX, NOFOLLOW”

- META NAME=”ROBOTS” CONTENT=”NOINDEX, NOFOLLOW”

- META NAME=”ROBOTS” CONTENT=”NOARCHIVE”

- META NAME=”GOOGLEBOT” CONTENT=”NOSNIPPET”

Google will understand the following and interprets the following robots meta tag values:

- NOINDEX – prevents the page from being included in the index.

- NOFOLLOW – prevents Googlebot from following any links on the page.

(Note that this is different from the link-level NOFOLLOW attribute,

which prevents Googlebot from following an individual link.)

- NOARCHIVE – prevents a cached copy of this page from being available in the search results.

- NOSNIPPET – prevents a description from appearing below the page in the search results, as well as prevents caching of the page.

- NOODP – blocks the Open Directory Project description of the page

from being used in the description that appears below the page in the

search results.

- NONE – equivalent to “NOINDEX, NOFOLLOW”.

Robots META Tag Quick Reference

| Terms |

Googlebot |

Slurp |

BingBot |

Teoma |

| NoIndex |

YES |

YES |

YES |

YES |

| NoFollow |

YES |

YES |

YES |

YES |

| NoArchive |

YES |

YES |

YES |

YES |

| NoSnippet |

YES |

NO |

NO |

NO |

| NoODP |

YES |

YES |

YES |

NO |

| NoYDIR |

NO |

YES |

NO |

NO |

| NoImageIndex |

YES |

NO |

NO |

NO |

| NoTranslate |

YES |

NO |

NO |

NO |

| Unavailable_After |

YES |

NO |

NO |

NO |

I’ve included the robots meta tag in my tutorial as this IS one of

only a few meta tags / html head elements I focus on when it comes to

managing Googlebot and Bingbot. At a page level – it is a powerful way

to control if your pages are returned in search results pages.

These meta tags go in the [HEAD] section of a [HTML] page and

represent the only tags for Google I care about. Just about everything

else you can put in the [HEAD] of your HTML document is quite

unnecessary and maybe even pointless (for Google optimisation, anyway).

If you are interested in using methods like on-page robots

instructions and the robots.txt file to control which pages get indexed

by Google and how Google treats them,

Sebastian knows a lot more than me.

External Links

H1-H6: Headers

I can’t find any definitive proof online that says you need to use

Heading Tags (H1, H2, H3, H4, H5, H6) or that they improve rankings in

Google, and I have seen pages do well in Google without them – but I do

use them, especially the H1 tag on the page.

For me it’s another piece of a ‘perfect’ page, in the traditional sense, and I try to build a site for Google and humans.

<h1>This is a page title</h1>

I still generally

only use one <h1> heading tag in my keyword targeted pages – I believe this is the way the

W3C intended

it be used in HTML4 – and I ensure they appear at the top of a page

above relevant page text and written with my main keywords or keyword

phrases incorporated.

I have never experienced any problems using CSS to control the appearance of the heading tags making them larger or smaller.

You can use multiple H1s in HTML5, but most sites I find I work on still use HTML4.

I use as many H2 – H6 as is necessary depending on the size of the page, but generally I use H1, H2 & H3. You can see here

how to use header tags properly (basically just be consistent, whatever you do, to give your users the best user experience).

How many words in the H1 Tag? As many as I think is sensible – as short and snappy as possible usually.

I also discovered Google will use your Header tags as page titles at some level if your title element is malformed.

As always be sure to make your heading tags highly relevant to the content on that page and not too spammy, either.

How Many Words & Keywords?

I get asked this all the time –

how much text do you put on a page to rank for a certain keyword?

The answer is there is

no optimal amount of text per page, but

how much text you’ll ‘need’ will be based on your DOMAIN AUTHORITY,

your TOPICAL RELEVANCE and how much COMPETITION there is for that term,

and HOW COMPETITIVE that competition actually is.

Instead of thinking about the

quantity of the text, you should think more about the

quality of the content on the page. Optimise this with searcher intent in mind. Well, that’s how I do it.

I don’t find that you need a minimum amount of words or text to rank

in Google. I have seen pages with 50 words out rank pages with 100, 250,

500 or 1000 words. Then again I have seen pages with no text rank on

nothing but inbound links or other ‘strategy’. In 2015, Google is a lot

better at hiding away those pages, though.

At the moment, I prefer long form pages with a lot of text although

although I still rely heavily on keyword analysis to make my pages. The

benefits of longer pages are that they are great for long tail key

phrases. Creating deep,information rich pages really focuses the mind

when it comes to producing authoritative, useful content.

Every site is different. Some pages, for example, can get away with

50 words because of a good link profile and the domain it is hosted on.

For me, the important thing is to make a page relevant to a user’s

search query.

I don’t care how many words I achieve this with and often I need to

experiment on a site I am unfamiliar with. After a while, you get an

idea how much text you need to use to get a page on a certain domain

into Google.

One thing to note – the more text you add to the page, as long as it

is unique, keyword rich and relevant, the more that page will be

rewarded with more visitors from Google.

There is no optimal number of words on a page for placement in

Google. Every website – every page – is different from what I can see.

Don’t worry too much about word count if your content is original and

informative. Google will probably reward you on some level – at some

point – if there is lots of unique text on all your pages.

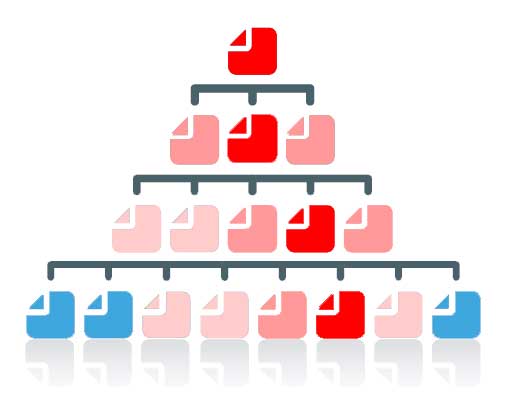

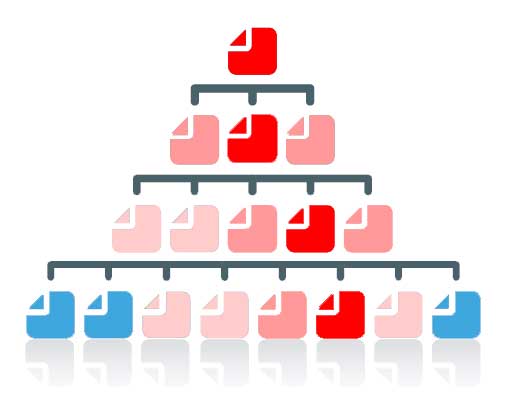

TIP: The ‘

inverted pyramid‘ – pictured above – is useful when creating pages for the web too – very useful.

Keyword Density?

The short answer to this is –

no.

There is no one-size-fits-all keyword density,

no

optimal percentage guaranteed to rank any page at number 1. However, I

do know you can keyword stuff a page and trip a spam filter.

Most web optimisation professionals agree there is no ideal percent

of keywords in text to get a page to number 1 in Google. Search engines

are not that easy to fool, although the key to success in many fields

doing simple things well (or at least better than the competition).

I write natural page copy where possible always focused on the key

terms – I never calculate density in order to identify the best % –

there are way too many other things to work on. I have looked into this.

If it looks natural, it’s ok with me.

Normally I will try and get related terms in the page, and if I have 5

paragraphs, I might have the keyword in 4 or 5 of those as long as it

doesn’t look like I stuffed them in there.

Optimal keyword density is a myth, although there are many who would argue otherwise.

Internal Links To Relevant Pages

I

link to relevant internal pages in my site when necessary.

I silo any relevance or trust mainly though links in text content and

secondary menu systems and between pages that are relevant in context

to one another.

I don’t worry about perfect silo’ing techniques any more, and don’t

worry about whether or not I should link to one category from another,

as I think the ‘boost’ many proclaim is minimal on the size of sites I

usually manage.

I do not obsess about site architecture as much as I used to…. but I

always ensure my pages I want indexed are all available from a crawl

from the home page – and I still emphasise important pages by linking to

them where relevant. I always aim to get THE most important exact match

anchor text pointing to the page from internal links – but I avoid

abusing internals and avoid overtly manipulative internal links that are

not grammatically correct, for instance..

There’s no set method I find works for every site, other than to

link to related internal pages often without overdoing it and where appropriate.

What Are Google Sitelinks?

When Google knows enough about the history of a website (or web

page), it will sometimes display what are called sitelinks (or mega

sitelinks) under the domain of the website in question. This is normally

triggered when Google is confident this is the site you are looking

for, based on the search terms you used.

At one time in the past you could activate for these for some generic

terms based on building links to the domain (or if the site had a lot

of domain authority), but in 2015, site links are usually reserved for

navigational queries with a heavy brand bias, a brand name or a company

name, for instance, or the website address.

I’ve tracked the evolution of Google sitelinks in organic listings

over the years, and they are seemly picked based on a number of factors,

a few of which I have observed.

How To Get Google Sitelinks?

Pages that appear in the blue sitelinks are often popular pages on

your site, in terms of internal or external links, or even recent posts

that may have been published on your blog. Google likes to seem to mix

this up a lot, perhaps to offer some variety, and probably to obfuscate

results to minimise or discourage manipulation.

Sometimes it returns pages that leave me scratching my head as to why Google selected a particular page appears.

If you don’t HAVE site links, have a bit of patience and focus on

other areas of your web marketing, like adding more content, get some PR

or social activity focussed on the site. Google WILL give you sitelinks

on some terms, ONCE it’s convinced your site is the destination for

most people typing that term in. That could be a week or months, but the

more popular the site is, the more likely Google will catch up fast.

Sitelinks are not something can be switched on or off, although you

can control to some degree which pages are presented as sitelinks. You

can do that in Google Webmaster Tools.

Link Out To Related Sites

With regard to on page seo best practices, I usually link out to

other quality relevant pages on other websites where possible and where a

human would find it valuable.

I don’t link out to other sites from homepage. I want all the PR residing in the home page to be shared only with my internal pages.

I don’t like out to other sites from my category pages either, for the same reason.

I link to other relevant sites (a deep link where possible)

from individual pages and I do it often, usually. I don’t worry about link equity or PR leak because I control it on a page to page level.

This works for me, it allows me to share the link equity I have with

other sites while ensuring it is not at the expense of pages on my own

domain. It may even help get me into a

‘neighbourhood’ of relevant sites, especially when some of those start linking back to my site.

Linking out to other sites, especially using a blog, also helps tell

others that might be interested in your content that your page is